Information Retrieval

What is Information Retrieval?

Information retrieval is the process of searching for information in a collection of documents. The goal of information retrieval is to find documents that are relevant to the user’s query.

Key components and concepts of Information Retrieval include:

- Query: A query is a formal request for information. It can be a set of keywords, a natural language question, or any other means by which a user expresses their information need.

- Document: In the context of information retrieval, a document is a unit of information. It could be a web page, an article, a book, an image, or any other piece of content that contains information.

- Relevance: The concept of relevance is central to information retrieval. Relevance indicates how well a document or data matches the user’s information need. Retrieval systems aim to present the most relevant information first.

- Indexing: To facilitate efficient retrieval, documents are often preprocessed and indexed. Indexing involves creating a structured representation of the content, making it easier and faster to search through large collections.

- Retrieval Models: Various retrieval models and algorithms are employed to rank and present documents based on their relevance to a given query. Popular models include the Vector Space Model and probabilistic models.

- Evaluation: Information retrieval systems are evaluated based on criteria such as precision, recall, and F1 score. Precision measures the accuracy of the retrieved results, recall measures the system’s ability to find all relevant documents, and the F1 score is a combination of precision and recall.

- User Interaction: Information retrieval systems often involve user interaction, allowing users to refine queries, provide feedback on relevance, and iteratively improve the retrieval process.

Information Retrieval is a broad field with applications in web search engines, document management systems, digital libraries, and various domains where efficiently finding and accessing relevant information is crucial.

Boolean Retrieval

What is Boolean Retrieval?

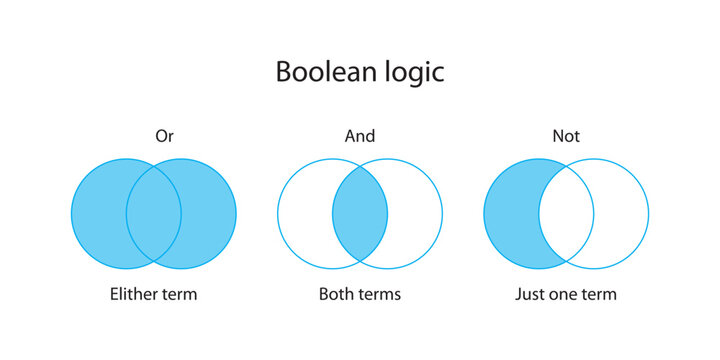

Boolean retrieval is a retrieval model that supports queries that contain boolean operators, such as AND, OR, and NOT. For example, cat AND dog means that the document must contain both cat and dog. cat OR dog means that the document must contain either cat or dog. cat NOT dog means that the document must contain cat but not dog.

Advantages and Disadvantages of Boolean Retrieval

The advantage of this retrieval method lies in its simplicity and intuitiveness, allowing users to precisely define their queries by combining keywords. However, it has limitations, such as the inability to handle semantic relationships between query terms and the lack of consideration for the importance of words. In modern information retrieval systems, a combination of Boolean Retrieval and other techniques, such as the vector space model and text analysis, is often employed to enhance the quality and effectiveness of search results.

Term Vocabulary

In Information Retrieval (IR), term vocabulary refers to the collection of unique terms or terms of significance that are extracted from a document collection for the purpose of indexing and searching. The term vocabulary plays a crucial role in creating inverted indexes, which are data structures that map terms to the documents in which they appear. Here are some key concepts related to term vocabulary in IR:

- Token: A token is a sequence of characters that are treated as a unit. In the context of IR, tokens are typically words, but they can also be phrases, numbers, or other units of interest. Tokenization is the process of breaking a document into tokens. Token normalization is the process of converting tokens to a standard form, which is called a term, such as converting all tokens to lowercase, removing punctuation and stop words, etc.

- Term: A term is a word or a sequence of words that represents a concept or an idea. In the context of IR, terms are the basic units used for indexing and searching.

- Lexicon: The lexicon is the complete set of terms present in a document collection. It encompasses all unique terms found in the documents and serves as the foundation for building the term vocabulary.

- Document Frequency (DF): The document frequency of a term refers to the number of documents in a collection that contain that particular term. Terms with high document frequency may be less discriminative for retrieval purposes.

- Collection Frequency (CF): The collection frequency of a term is the total number of occurrences of that term across all documents in the collection. It provides information about the overall importance or prevalence of a term in the entire collection.

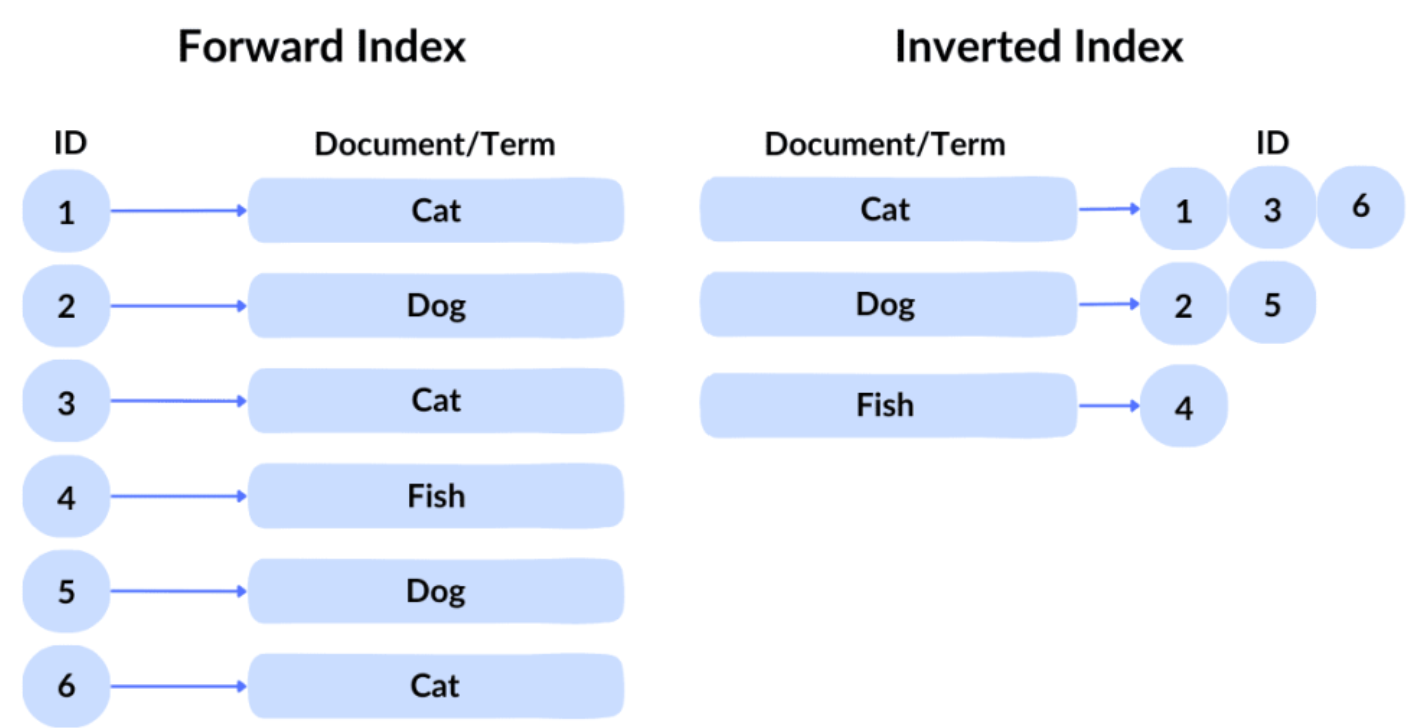

- Inverted Index: An inverted index is a data structure that maps terms to the documents in which they occur. It is created during the indexing phase and is used to speed up the retrieval process by allowing for efficient term lookup.

- Stop Words: Stop words are common words (e.g., “and,” “the,” “is”) that are often excluded from the term vocabulary during indexing because they are considered less informative for retrieval.

- Stemming: Stemming is the process of reducing words to their root or base form. It helps in consolidating variations of a term into a single representation. For example, “running” and “runner” might both be stemmed to “run.”

- Term Weighting: Term weighting assigns weights to terms based on their importance in a document or collection. Commonly used techniques include Term Frequency-Inverse Document Frequency (TF-IDF) weighting.

Inverted Index

An inverted index is a data structure used in information retrieval to efficiently map terms or keywords to the documents or records in which they occur. The term “inverted” refers to the reversal of the relationship between terms and documents compared to a traditional forward index, where documents are indexed by terms.

Skip List

Query Processing

class Doc:

def __init__(self, doc_id, skip_id=None):

self.doc_id = doc_id # doc id

self.skip_id = skip_id # skip pointer

class Record:

def __init__(self, term_id, docs: dict):

self.term_id = term_id # term id

self.docs = docs # {doc_id: Doc}

self.record = sorted(list(docs.keys())) # sorted doc ids

@property

def df(self):

return len(self.record) # document frequency

def i_th_doc(self, i):

return self.docs[self.record[i]] # i-th document

class InvertedIndex:

def __init__(self):

self.records = dict() # term_id -> Record

def add(self, term_id, docs):

if term_id not in self.records: # new term

self.records[term_id] = Record(term_id, docs) # create new record

def merge2(self, term_1, term_2, skip=False, _and=True):

if term_1 in self.records and term_2 in self.records: # both terms exist

idx_1 = 0 # index of term_1

idx_2 = 0 # index of term_2

res = [] # result

while (

idx_1 < self.records[term_1].df and

idx_2 < self.records[term_2].df

):

doc_1 = self.records[term_1].i_th_doc(idx_1) # doc of term_1

doc_2 = self.records[term_2].i_th_doc(idx_2) # doc of term_2

if doc_1.doc_id == doc_2.doc_id: # same doc

res.append(doc_1.doc_id) # add to result

idx_1 += 1 # move to next doc of term_1

idx_2 += 1 # move to next doc of term_2

elif doc_1.doc_id < doc_2.doc_id: # doc_1 < doc_2

if _and:

if skip: # use skip pointer

if (

doc_1.skip_id is not None

and doc_1.skip_id <= doc_2.doc_id

): # skip

while (

self.records[term_1].record[idx_1]

< doc_1.skip_id

):

idx_1 += 1

else: # no skip pointer

idx_1 += 1 # move to next doc of term_1

else: # OR

res.append(doc_1.doc_id) # add to result

idx_1 += 1 # move to next doc of term_1

else: # doc_1 > doc_2

if _and:

if skip: # use skip pointer

if (

doc_2.skip_id is not None

and doc_2.skip_id <= doc_1.doc_id

): # skip

while (

self.records[term_2].record[idx_2]

< doc_2.skip_id

):

idx_2 += 1

else: # no skip pointer

idx_2 += 1 # move to next doc of term_2

else: # OR

res.append(doc_2.doc_id) # add to result

idx_2 += 1 # move to next doc of term_2

if not _and: # OR

while idx_1 < self.records[term_1].df: # term_1 has more docs

res.append(self.records[term_1].record[idx_1]) # add to result

idx_1 += 1 # move to next doc of term_1

while idx_2 < self.records[term_2].df: # term_2 has more docs

res.append(self.records[term_2].record[idx_2]) # add to result

idx_2 += 1 # move to next doc of term_2

return res

else: # one of the terms does not exist

raise Exception("Term does not exist")

Optimizing Query Processing

Each Boolean expression can be converted to a conjunctive normal form (CNF), which is a conjunction of disjunctions. For example, cat AND dog OR bird can be converted to (cat AND dog) OR bird. If we record the document frequency of each term, we can merge the terms with the smallest document frequency first, which can reduce the number of documents to be processed.

Index Construction

Term vocabulary is the set of all terms in the collection, already mentioned in Inverted Index. The purpose of constructing the mapping is to replace the string with an integer variable to save space, which is the most common indexing method.

BSBI

SPIMI

Logarithmic merge

Index Compression

Dictionary Compression

Dictionary as a string

Front Coding

Inverted Index Compression

Variable Byte (VB) Encoding

Gamma Encoding

Wildcard Queries

Wildcard queries are queries that contain wildcard characters, such as * and ?. For example, *ing is a wildcard query term that ends with ing. *ing matches sing, bring, singing, bringing, etc. Generally, * matches any number of characters, while ? matches exactly one character. For example, ?ing matches sing, bing, but not singing or bringing. It is easy to query mon* via B-Tree, but it is hard to query *ing via B-Tree. The reason is that mon* is a prefix query, which can be easily queried via B-Tree. However, *ing is a suffix query, which cannot be queried via B-Tree. Thus, a new tree is used to query suffix queries, where all terms are inverted. Then query *ing can be queried as gni*.

Dictionary

The difference bewteen Dictionary and Term vocabulary is that Dictionary refers to the data structure that stores the term vocabulary, while Term vocabulary refers to the set of all terms in the collection. Two common data structures for Dictionary are Hash Table and B-Tree.

Hash Table

In Information Retrieval (IR), a hash table is a data structure that can be used for efficient storage and retrieval of information, particularly in the context of indexing and searching. The basic idea behind a hash table is to use a hash function to map keys (terms, in the case of IR) to indices in an array or table. This allows for quick access to the associated values (documents or postings) using the computed hash.Here’s how a hash table can be applied in the context of IR:

- Hash Function: A hash function takes a key (term) as input and produces a hash code or index as output. The goal is to distribute the keys uniformly across the array to minimize collisions (cases where different keys hash to the same index).

- Array or Table: The hash table consists of an array or table of buckets, each capable of holding one or more key-value pairs. The size of the array is typically chosen based on the expected number of terms and the desired level of efficiency.

- Collision Resolution: Collisions occur when two different keys hash to the same index. There are various techniques for resolving collisions, and common ones include chaining (each bucket contains a linked list of key-value pairs) and open addressing (finding the next available slot in the array).

- Indexing and Retrieval: During the indexing phase, terms are hashed, and the resulting index points to the location in the array where information about the term (such as the list of documents containing the term) is stored.During retrieval, the hash function is applied to the query term, and the resulting index is used to quickly locate the relevant information in the hash table.

- Benefits: Hash tables can offer fast retrieval times when the hash function is well-designed and collisions are effectively handled. They are particularly useful for exact match queries, where the goal is to find documents containing a specific term.

- Considerations: Hash tables may not perform well in scenarios where partial matching or range queries are common, as these operations are not inherently supported by traditional hash functions.

B-Tree

In Information Retrieval (IR), B-trees (Balanced Trees) are a type of self-balancing search tree data structure that can be used for organizing and managing large amounts of data. While B-trees are not as commonly associated with traditional IR models as they are with database systems, they can still play a role in certain aspects of information retrieval, particularly when dealing with large-scale indexing and storage of terms and documents. Here’s an overview of how B-trees can be applied in the context of IR:

- Indexing Terms: B-trees can be employed to create an index structure for terms in a document collection. Each node in the B-tree represents a term, and the tree structure allows for efficient searching, insertion, and deletion of terms.

- Balanced Structure: B-trees maintain a balanced structure, which means that the depth of the tree is kept relatively constant. This balance ensures that search operations have a consistent time complexity, making them efficient for large datasets.

- Sorted Order: B-trees maintain their keys in sorted order, allowing for range queries and efficient sequential access to terms. This can be beneficial in scenarios where queries involve ranges of terms or require ordered traversal.

- Disk-Based Storage: B-trees are well-suited for disk-based storage systems, making them useful in scenarios where the index is too large to fit entirely in memory. They minimize the number of disk I/O operations required for search and retrieval.

- Multiple Keys per Node: B-trees can have multiple keys per node, allowing them to efficiently store and retrieve information associated with a term, such as the list of documents containing the term or other metadata.

- Updates and Inserts: B-trees efficiently handle updates and insertions, ensuring that the tree remains balanced. This is important in IR systems where the document collection is dynamic and frequently updated.

Permuterm Index

K-gram Index

Spell Correction

IR Evaluation

General evaluation methods include:

- Effiency

-

- Time cost

-

- Space cost

-

- Response time

Effectiveness-

- Accuracy: not a good metric because accuracy can be very high when RR is very low and NN is very high, since the number of not relevant documents is usually much larger than the number of relevant documents.

-

- Precision

-

- Recall

-

- F1

-

- MAP

-

- MRR

-

- Bpref

-

- GMAP

-

- NDCG

- Other

-

- Coverage

-

- Diversity

-

- Update Speed

-

- User Satisfaction

Metrics for evaluating IR systems include metrics for single query and metrics for multiple queries.For a given query, the original documents can be categorized as relevant and not relevant. The retrieved documents can be categorized as retrieved and not retrieved. The four categories can be summarized as follows:

| Relevant | Not Relevant | |

|---|---|---|

| Retrieved | True Positive (TP, RR) | False Positive (FP, RN) |

| Not Retrieved | False Negative (FN, NR) | True Negative (TN, NN) |

Metrics are detailed at Evaluation in Data Science.

Issues with the above metrics:

- It is hard to compute

Recallwhen the number of relevant documents is unknown. - It is hard to compare

PrecisionandRecall. For example, if we retrieve all documents, thenRecallis 1, butPrecisionis very low. If we retrieve only one document, thenPrecisioncould be 1, butRecallis very low. Therefore, we need a metric that combinesPrecisionandRecall. - Order of retrieved documents is not considered. Considering the following two cases:

- Case 1: The first 10 documents are relevant, and the rest are not relevant.

- Case 2: The last 10 documents are relevant, and the rest are not relevant.

- In both cases,

PrecisionandRecallare the same, but the first case is better than the second case.

Pooling

Pooling is a method to evaluate IR systems when the number of relevant documents is unknown. The basic idea is to retrieve a large number of documents from different IR systems, and then manually label the top \(K\) documents (union) as relevant or not relevant. Then the metrics can be computed based on the manually labeled documents.

- When \(K\) is small, \(RR_{pooling}<=RR\), because some relevant documents may not be retrieved. The denominator of the precision remains the same and the numerator may become smaller, so the precision may decrease.

- When all retrieved documents are labeled, \(RR_{pooling}=RR\), precision remains the same, but recall may increase because some relevant documents may not be retrieved and the denominator of the recall may become smaller.

Precision versus Recall curve

If retrieved documents are ranked, then Recall monotonically increases as the order increases, while the case of Precision is not clear. Therefore, we can calculate the Recall and the corresponding Precision at the same interval, and then plot the Precision versus Recall curve. The area under the curve is called AUC (Area Under Curve). The larger the AUC, the better the IR system.

There are cases where the point of a particular Recall may not exist, where we need to perform an interpolation operation. For a Recall of \(\%t\), we find the largest Precision that is between \(\%t\) and \(\%t+10\%\), and then use the Precision as the Precision of \(\%t\).

Break point is the point where the Precision is equal to the Recall. The greater the Break point, the better the IR system.

Average Precision (AP)

Average precision averages presicion at different recall points. Let \(R\) be the set of all relevant documents, and \(Rank(R_i)\) be the rank of the \(i\)-th relevant document if \(R_i\) is retrieved, the corresponding presicion is \(P(R_i)=\frac{i}{Rank(R_i)}\), otherwise \(P(R_i)=0\). Then the average precision is defined as follows:

\[AP=\frac{\sum_{i=1}^{|R|}P(R_i)}{|R|}\]Unlike AP, Precision@N is a metric that only considers precision at the top \(N\) documents.

Mean Average Precision (MAP)

Mean average precision is the average of average precision of all queries, including macro MAP and micro MAP. Macro MAP is the average of average precision of all queries, while micro MAP sums up all relevant documents and all retrieved documents, and then computes the average precision.

Mean Reciprocal Rank (MRR)

Mean reciprocal rank is the average of reciprocal rank of all queries. The reciprocal rank of a query only considers the rank of the first relevant document, and the reciprocal rank is defined as follows:

\[RR=\frac{1}{Rank(R_1)}\]Bpref

Bpref (Binary Preference) is a metric that considers the order of retrieved documents. The basic idea is to compare the order of relevant documents and the order of retrieved documents. It penalizes irrelevant documents for ranking ahead of relevant documents. Let \(R\) be the set of all retrieved relevant documents, and \(d_i\) be the \(i\)-th retrieved document,

- \(d_i^r\) is a relevant document

- \(d_i^n\) is a not relevant document

- \(d_i^u\) is an unknown document

Then the Bpref is defined as follows:

\[Bpref=\frac{1}{|R|}\sum_{i=1}^{|R|}(1-\frac{\min(C_{n,r},|R|)}{|R|})\]where \(C_{n,r}\) is the number of not relevant documents that rank ahead of relevant document \(d_i^r\).

GMAP

GMAP considers the stability of the system’s performance on several different queries through geometric means.

\[\begin{aligned} GMAP &= \sqrt[N]{\prod_{i=1}^NAP_i} \\ &= \exp(\frac{1}{N}\sum_{i=1}^N\ln(AP_i)) \end{aligned}\]It is easy to say that the larger the GMAP, the better the system. However, the geometric mean is very sensitive to the value of 0, so the GMAP is not a good metric, compared to MAP.

NDCG

Each document is not just relevant or irrelevant, but has a relevance level, e.g., 0, 1, 2, 3. We can assume that for the returned results,

- Higher relevance is better

- The more highly relevant documents, the better

- The further forward the highly relevant documents, the better

Kappa

Kappa is a metric that considers the agreement between two judges. The basic idea is to compare the agreement between the two judges and the agreement between the two judges and the random agreement. Let \(P_a\) be the agreement between the two judges, \(P_e\) be the random agreement, then the Kappa is defined as follows:

\[\kappa=\frac{P_a-P_e}{1-P_e}\]| yes | no | total | |

|---|---|---|---|

| yes | \(a\) | \(b\) | \(a+b\) |

| no | \(c\) | \(d\) | \(c+d\) |

| total | \(a+c\) | \(b+d\) | \(a+b+c+d\) |

TF-IDF

TF-IDF (Term Frequency - Inverse Document Frequency) is a weighting scheme that is used to evaluate how important a word is to a document in a collection or corpus. The intuition behind TF-IDF is that a word is important if it appears frequently in a document, but not frequently in the whole collection. The TF-IDF of a word \(w\) in a document \(d\) is defined as follows:

\[TFIDF(w,d)=TF(w,d)*IDF(w)\]where \(TF(w,d)\) is the term frequency of \(w\) in \(d\), and \(IDF(w)\) is the inverse document frequency of \(w\), which is defined as follows:

\[IDF(w)=\log\frac{N}{n_w}\]where \(N\) is the total number of documents in the collection, and \(n_w\) is the number of documents that contain \(w\).

Sometimes, \(TF(w,d)\) is replaced by \(1+\log(TF(w,d))\) to avoid the problem of long documents.

Vector Space Model

The simplest way to represent a document is to use a vector, where each dimension represents a word, and the value of the dimension represents the frequency/existence of the word in the document, which is called Bag of Words (BOW). We can also use TF-IDF to represent a document.

The great thing about the vector space model is that we can use the cosine similarity to measure the similarity between a query and a document, or between two documents. The cosine similarity is defined as follows:

\[\cos(\theta)=\frac{\vec{q}\cdot\vec{d}}{|\vec{q}||\vec{d}|}\]where \(\vec{q}\) is the query vector, and \(\vec{d}\) is the document vector.

Usually, vectors are normalized to unit vectors (\(\vert\vec{q}\vert=\vert\vec{d}\vert=1\)), so the cosine similarity can be simplified as follows:

\[\cos(\theta)=\vec{q}\cdot\vec{d}\]Feedback

Feedback and query expansion are two ways to improve the recall of IR systems. The essence of relevance feedback is to use the relevance determination of the retrieved document (determination originated manually or non-manually) as return information in the hope of improving retrieval results. Feedback can be divided into:

-

User feedbackorexplicit feedback: The user manually determines the relevance of the retrieved document. -

Implicit feedback: The system automatically determines the relevance of the retrieved document based on the user’s behavior. -

Pseudo feedbackorblind feedback: The system directly assumes that the first k returned documents are relevant, and then provides feedback without user intervention.

Rocchio Algorithm

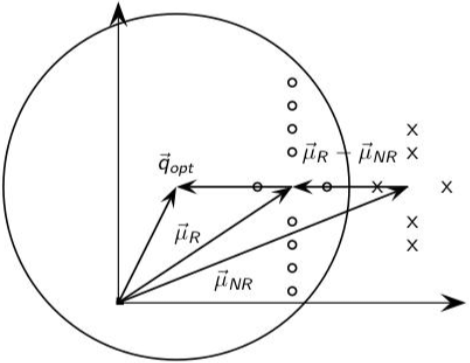

Since documents are represented as vectors, we can use the Rocchio algorithm to improve the query vector.

Let \(\vec{d}\) be the document vector, \(\vec{\mu}(D)\) be the centroid of documents:

\[\vec{\mu}(D)=\frac{1}{|D|}\sum_{\vec{d}\in D}\vec{d}\]Rocchio algorithm tries to find the query vector that makes the largest difference in similarity between the query and the set of relevant/irrelevant documents.

\[\vec{q}_{opt}=argmax_{\vec{q}}\left[sim(\vec{q},\vec{\mu}(R))-sim(\vec{q},\vec{\mu}(N))\right]\]where \(R\) is the set of relevant documents, and \(N\) is the set of irrelevant documents.

Some additional assumptions can be made to simplify the formula:

\[\vec{q}_{opt} = \vec{\mu}(R)+\left[\vec{\mu}(R)-\vec{\mu}(N)\right]\]

This approach eliminates the overhead of searching and is very effective. In practice, we generally use the following formula:

\[\vec{q}_{opt} = \alpha\vec{q_0}+\beta\vec{\mu}(R)-\gamma\vec{\mu}(N)\]where \(\vec{q_0}\) is the original query vector, and \(\alpha\), \(\beta\), and \(\gamma\) are the positive weights.

Assumptions about relevant feedback

- The relevant documents are similar to each other.

- The initial query should be close to the relevant documents.

Query Expansion

Probabilistic Retrieval

Define three random variables \(R\), \(Q\), and \(D\), where \(R = {0,1}\), \(Q\) is a query, and \(D\) is a document, and the similarity between the query and the document can be measured by calculating conditional probabilities \(P(R=1\vert Q=q,D=d)\).

\[P(R=1|Q=q,D=d) + P(R=0|Q=q,D=d) = 1\]Logistic Regression

\[\log\frac{P(R=1|Q=q,D=d)}{P(R=0|Q=q,D=d)} = \beta_0 + \sum_{i=1}\beta_if_i(q,d)\]where \(f_i(q,d)\) is the \(i\)-th feature of the query and the document.

\[P(R=1|Q=q,D=d) = \frac{1}{1+e^{-(\beta_0 + \sum_{i=1}\beta_if_i(q,d))}}\]Logistic regression is based on rigorous mathematical derivations, but feature selection is difficult and the choice of feature functions is very subjective.

Binary Independence Model (BIM)

The assumptions of the BIM model are based on the Bayesian theorem:

\[\begin{aligned} \log \frac{P(R=1|D=d)}{P(R=0|D=d)} &= \log \frac{P(D=d|R=1)P(R=1)}{P(D=d|R=0)P(R=0)} \\ &\propto \log \frac{P(D=d|R=1)}{P(D=d|R=0)} \end{aligned}\]where \(P(D\vert R)\) is the probability of generating a document \(d\) given that the document is relevant or not relevant. It can be assumed that the lexical items of relevant/irrelevant documents with satisfy some overall distribution, then document \(D\) can be generated by sampling. Two distributions are used to model the generation of documents:

- Multi-variate Bernoulli distribution: The document is generated by sampling the

presenceorabsenceof each word. -

- \[P(w_1,w_2,...,w_n|R=1)=\prod_{i=1}^{|T|}P(t_i|R=1)^{t_i \in d}(1-P(t_i|R=1))^{t_i \notin d}\]

- Multinomial distribution: The document is generated by sampling the

frequencyof each word. -

- \[P(w_1,w_2,...,w_n|R=1)=\prod_{i=1}^{|D|}P(t_i|R=1)^{f_i}\]

where \(t_i\) is the \(i\)-th term in the vocabulary, \(f_i\) is the frequency of the \(i\)-th term in the document, and \(\vert T\vert\) is the size of the vocabulary, and \(\vert D\vert\) is the set of all terms in the document.

Multi-variate Bernoulli distribution is used to model the generation of documents in the BIM model.

\[\begin{aligned} \log \frac{P(R=1|Q=q,D=d)}{P(R=0|Q=q,D=d)} &\propto \log \frac{P(D=d|R=1)}{P(D=d|R=0)} \\ &= \log \frac{\prod_{i=1}^{|T|}P(t_i|R=1)^{t_i \in d}(1-P(t_i|R=1))^{t_i \notin d}}{\prod_{i=1}^{|T|}P(t_i|R=0)^{t_i \in d}(1-P(t_i|R=0))^{t_i \notin d}} \\ &= \sum_{i=1}^{|T|}\left[e_i\log\frac{P(t_i|R=1)}{P(t_i|R=0)} + (1-e_i)\log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)}\right] \\ &= \sum_{i=1}^{|T|}\left[e_i\log\frac{P(t_i|R=1)}{P(t_i|R=0)} + \log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)}-e_i\log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)}\right] \\ &= \sum_{i=1}^{|T|}\left[e_i(\log\frac{P(t_i|R=1)}{P(t_i|R=0)}-\log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)}) + \log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)}\right] \\ &= \sum_{i=1}^{|T|}\left[e_i\log\frac{P(t_i|R=1)(1-P(t_i|R=0))}{P(t_i|R=0)(1-P(t_i|R=1))} + \log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)}\right] \\ &= \sum_{i=1}^{|D|}\log\frac{P(t_i|R=1)(1-P(t_i|R=0))}{P(t_i|R=0)(1-P(t_i|R=1))} + \sum_{i=1}^{|T|}\log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)} \\ &= \sum_{i=1}^{|Q\cap D|}\log\frac{P(t_i|R=1)(1-P(t_i|R=0))}{P(t_i|R=0)(1-P(t_i|R=1))} + \sum_{i=1}^{|D-Q|}\log\frac{P(t_i|R=1)(1-P(t_i|R=0))}{P(t_i|R=0)(1-P(t_i|R=1))} + \sum_{i=1}^{|T|}\log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)} \\ \end{aligned}\]Here,\(e_i\) means \(t_i\) is in the document, and \(e_i\)=1, otherwise \(e_i\)=0.

\[\sum_{i=1}^{|T|}\log\frac{1-P(t_i|R=1)}{1-P(t_i|R=0)}\]is a constant, and for those terms not in \(Q\),

\[\log\frac{P(t_i|R=1)(1-P(t_i|R=0))}{P(t_i|R=0)(1-P(t_i|R=1))}=0\], so the only quantity to estimate is

\[\sum_{i=1}^{|Q\cap D|}\log\frac{P(t_i|R=1)(1-P(t_i|R=0))}{P(t_i|R=0)(1-P(t_i|R=1))}\]| Relevant Documents (\(R_i\)) | Irrelevant Documents (\(N-R_i\)) | |

|---|---|---|

| \(t_i \in D\) (\(n_i\)) | \(r_i\) | \(n_i-r_i\) |

| \(t_i \notin D\) (\(N-n_i\)) | \(R_i-r_i\) | \(N-R_i-n_i+r_i\) |

Specifically, \(P(t_i\vert R=1)\) and \(P(t_i\vert R=0)\) can be estimated (smoothing MLE) as follows:

\[P(t_i|R=1)=\frac{r_i}{R_i}=\frac{r_i+0.5}{R_i+1}\] \[P(t_i|R=0)=\frac{N-r_i}{N-R_i}=\frac{N-r_i+0.5}{N-R_i+1}\]The search does not initially have a collection of relevant and irrelevant documents, and the assumptions can be made that \(P(t_i\vert R=1)\) is a constant, and \(P(t_i\vert R=0)=\frac{n_i}{N}\), which means that the more frequently a term appears in the collection, the less likely it is to be relevant.

The final calculation boils down to retrieval status value:

\[\begin{aligned} c_t &= \log\frac{p_t(1-q_t)}{q_t(1-p_t)} \\ &= \log\frac{p_t}{1-p_t}-\log\frac{q_t}{1-q_t} \\ &= \log\frac{(r_i+0.5)}{(R_i-r_i+0.5)}-\log\frac{(n_i-r_i+0.5)}{(N-R_i-n_i+r_i+0.5)} \\ &= \log\frac{(r_i+0.5)(N-R_i-n_i+r_i+0.5)}{(R_i-r_i+0.5)(n_i-r_i+0.5)} \end{aligned}\]where \(c_t\) is odds ratio of term \(t\). If \(c_t\) is positive, then the term is more likely to be relevant, otherwise it is more likely to be irrelevant. For a query with multiple terms, the final score is the sum of the scores of all terms.

The disadvantage of the BIM model is that it does not take into account factors such as TF, document length, etc., and there is an assumption of word-item independence, which is essentially an idf weighting formula.

BM25

\[w(t,d)=\frac{qtf}{k_3+qtf}\frac{k_1tf}{k_1((1-b)+b\frac{L_d}{L_{avg}})+tf}\log_2\frac{N-df+0.5}{df+0.5}\]where \(qtf\) is the term frequency in the query, \(tf\) is the term frequency in the document, \(df\) is the document frequency of the term, \(L_d\) is the length of the document, \(L_{avg}\) is the average length of the document, \(N\) is the total number of documents, \(k_1\), \(k_3\), and \(b\) are parameters.

language Model

Query Likelihood Model

\[\begin{aligned} P(D|Q)&=\frac{P(Q|D)P(D)}{P(Q)} \\ &\propto P(Q|D)P(D) \\ \end{aligned}\] \[\begin{aligned} RSV(Q,D)&=P(Q|D)\\ &=P(q_1,q_2,...,q_n|D)\\ &=P(q_1|D)P(q_2|D)...P(q_n|D)\\ &=\prod_{t\in Q}P(t|D)^{tf(t,Q)}\\ \end{aligned}\]Thus the retrieval problem is transformed into estimating the probability of a document generating a query, that is, finding all \(P(t\vert D)\), which can be estimated by the maximum likelihood estimation (MLE):

\[P(t|D)=\frac{tf(t,D)}{|D|}\]where \(\vert D\vert\) is the length of the document or the sum of the frequencies of all terms in the document.

To avoid the problem of zero probability, several smoothing methods are used:

- Jelinek-Mercer (JM), \(P(t\vert C)\) equals to the frequency of \(t\) in the document collection divided by the total number of terms in the collection, \(0\leq\lambda\leq 1\).

-

- \[P(t\vert D)=\lambda P_{ML}(t\vert D)+(1-\lambda)P(t\vert C)\]

- Dirichlet Prior (Dir), \(\mu\geq 0\)

-

- \[P(t\vert D)=\frac{tf(t,D)+\mu P(t\vert C)}{\vert D\vert+\mu}\]

- Absolute Discounting (Abs), \(0\leq\delta\leq 1\)

-

- \[P(t\vert D)=\frac{\max((tf(t,D)-\delta),0)}{\vert D\vert}+\frac{\delta\vert D_{unique}\vert}{\vert D\vert}P(t\vert C)\]